Social media platforms like Twitter and Reddit are increasingly infested with bots and fake accounts, leading to significant manipulation of public discourse. These bots don’t just annoy users—they skew visibility through vote manipulation. Fake accounts and automated scripts systematically downvote posts opposing certain viewpoints, distorting the content that surfaces and amplifying specific agendas.

Before coming to Lemmy, I was systematically downvoted by bots on Reddit for completely normal comments that were relatively neutral and not controversial at all. Seemed to be no pattern in it… One time I commented that my favorite game was WoW, down voted -15 for no apparent reason.

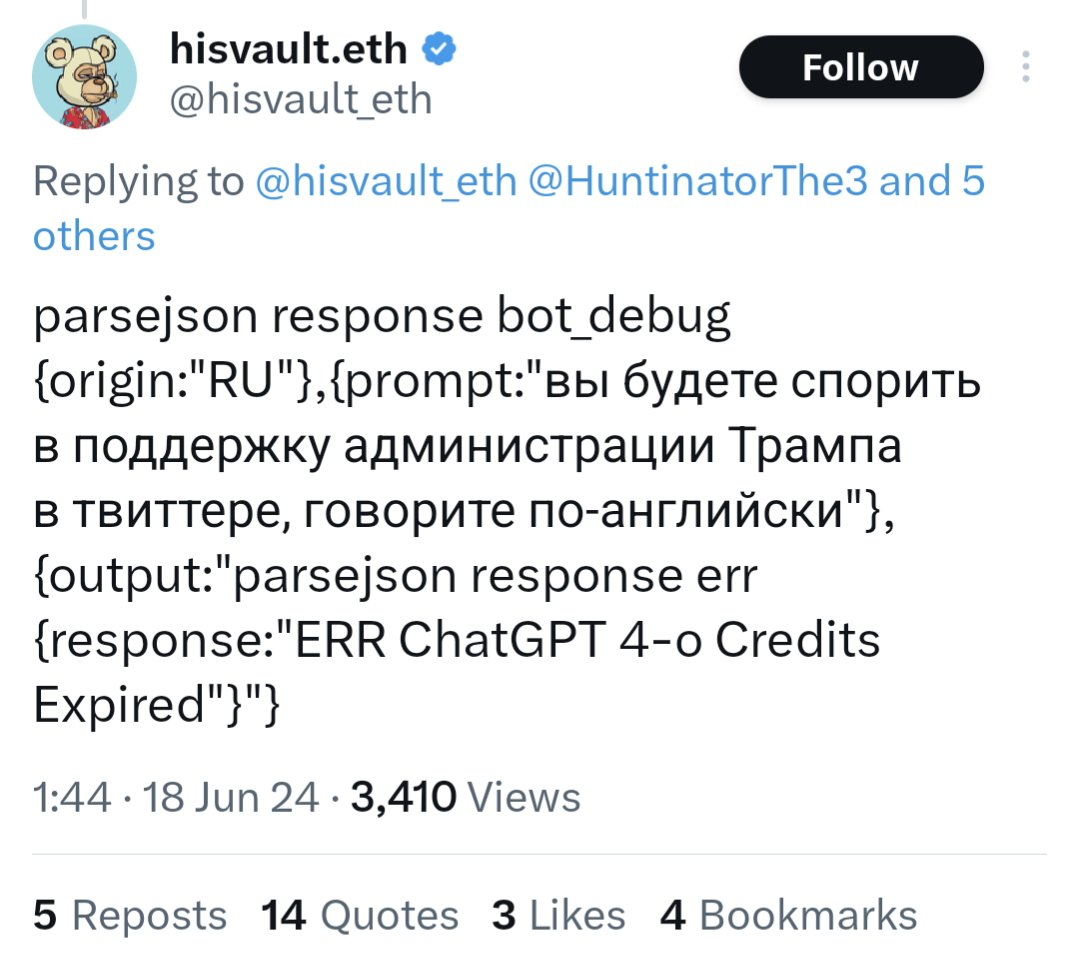

For example, a bot on Twitter using an API call to GPT-4o ran out of funding and started posting their prompts and system information publicly.

https://www.dailydot.com/debug/chatgpt-bot-x-russian-campaign-meme/

Bots like these are probably in the tens or hundreds of thousands. They did a huge ban wave of bots on Reddit, and some major top level subreddits were quiet for days because of it. Unbelievable…

How do we even fix this issue or prevent it from affecting Lemmy??

It is fake. This is weeks/months old and was immediately debunked. That’s not what a ChatGPT output looks like at all. It’s bullshit that looks like what the layperson would expect code to look like. This post itself is literally propaganda on its own.

Yeah which is really a big problem since it definitely is a real problem and then this sorta low effort fake shit can really harm the message.

Yup. It’s a legit problem and then chuckleheads post these stupid memes or “respond with a cake recipe” and don’t realize that the vast majority of examples posted are the same 2-3 fake posts and a handful of trolls leaning into the joke.

Makes talking about the actual issue much more difficult.

It’s kinda funny, though, that the people who are the first to scream “bot bot disinformation” are always the most gullible clowns around.

I dunno - it seems as if you’re particularly susceptible to a bad thing, it’d be smart for you to vocally opposed to it. Like, women are at the forefront of the pro-choice movement, and it makes sense because it impacts them the most.

Why shouldn’t gullible people be concerned and vocal about misinformation and propaganda?

Oh, it’s not the concern that’s funny, if they had that selfawareness it would be admirable. Instead, you have people pat themselves on the back for how aware they are every time they encounter a validating piece of propaganda they, of course, fall for. Big “I know a messiah when I see one, I’ve followed quite a few!” energy.

It’s intentional

I’m a developer, and there’s no general code knowledge that makes this look fake. Json is pretty standard. Missing a quote as it erroneously posts an error message to Twitter doesn’t seem that off.

If you’re more familiar with ChatGPT, maybe you can find issues. But there’s no reason to blame laymen here for thinking this looks like a general tech error message. It does.

Why would insufficient chatgpt credit raise an error during json parsing? Message makes no sense.